Given a string, s, and a set of strings, S, find a string, k that closely resembles s. For this problem, some possible answers would be Jaro Distance, Cosine Similarity, TF-IDF, Levenshtein distance, etc., which produce a score between two strings to reliably approximate their atomic similarity. In this blog post, I’d like to explore one of my favorites, Sorensen–Dice coefficient, that achieves even better similarity scores for some cases like FQDNs.

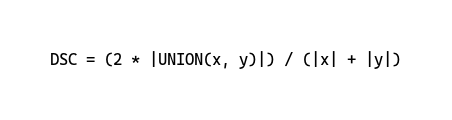

Sorensen–Dice Coefficient is ‘a statistic used to gauge the similarity of two samples.’ A sample can be a sequence of types such as a string or an integer. It can also be described mathematically as below, where the cardinality of intersection (of X and Y) is multiplied by a constant 2 (2 because the elements common in both samples were reduced through intersection). The outcome in the numerator portion is then divided by the individual cardinality of X and Y.

| |